In the early days of search engine optimization, a simple formula promised ranking success: calculate the perfect percentage of keyword repetition, sprinkle that exact ratio throughout your content, and watch your pages rise in search results. This metric, keyword density, dominated SEO strategy for years, spawning countless tools and guidelines about ideal percentages. However, modern search algorithms have evolved far beyond simple keyword counting, rendering keyword density largely irrelevant and potentially harmful when pursued obsessively. Understanding why keyword density no longer matters, and what has replaced it, helps you optimize content effectively for today’s sophisticated search engines.


What Is Keyword Density?

Keyword density is the percentage of times a keyword appears on a page compared to the total word count. The formula calculates how frequently a target keyword or phrase appears relative to all words on the page, expressed as a percentage:

Keyword Density = (Number of Keyword Occurrences / Total Words) × 100

For example, if your target keyword appears 10 times in a 1,000-word article, the keyword density would be 1%. If it appears 50 times, density would be 5%.
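To make the formula concrete, here is a minimal Python sketch. The function names (`tokenize`, `keyword_density`) are hypothetical, and the sketch assumes a simple tokenizer that lowercases text and treats runs of ASCII letters, digits, and apostrophes as words. Real density tools tokenize differently (and some weight multi-word phrases by their word count), so their percentages can differ slightly.

```python
import re

def tokenize(text: str) -> list[str]:
    # Lowercase, then keep runs of ASCII letters, digits, and apostrophes as words.
    return re.findall(r"[a-z0-9']+", text.lower())

def keyword_density(text: str, keyword: str) -> float:
    """Keyword density = (keyword occurrences / total words) * 100."""
    words = tokenize(text)
    phrase = tokenize(keyword)
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    # Slide a window the length of the keyword phrase over the text and
    # count exact matches, so multi-word keywords are handled too.
    occurrences = sum(
        1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase
    )
    return occurrences * 100 / len(words)

# The example above: 10 occurrences in a 1,000-word text.
article = " ".join(["seo"] * 10 + ["filler"] * 990)
print(keyword_density(article, "seo"))  # 1.0
```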

This metric emerged from early search engine algorithms that relied heavily on keyword frequency to determine relevance. The logic seemed straightforward: pages mentioning a keyword more frequently must be more relevant to that topic. This assumption led to the widespread belief that hitting a specific keyword density (often cited as 1-3% or 2-5%) would help pages rank better.

SEO practitioners treated keyword density as a scientific formula, using specialized tools to calculate exact percentages and adjusting content to hit target densities. Content creation often prioritized reaching specific keyword counts over natural writing or user value. This mechanical approach reflected the limitations of search engines at the time: they primarily matched keyword strings without understanding context, semantics, or true relevance.

The Rise and Fall of Keyword Density

Understanding keyword density’s history explains why it dominated SEO thinking and why it eventually lost relevance.

The early search era (1990s-early 2000s) saw primitive algorithms that relied heavily on keyword matching. Search engines like early Google, Yahoo, and AltaVista evaluated relevance primarily through keyword frequency and placement. Pages that repeated keywords more often generally ranked higher, making keyword density a legitimate optimization factor. SEOs quickly learned to exploit this, leading to increasingly aggressive keyword repetition.

The keyword stuffing problem emerged as website owners pushed keyword density to extremes, creating barely readable content that repeated keywords unnaturally just to manipulate rankings. Pages like “Buy cheap shoes. If you want to buy cheap shoes, our cheap shoes store has cheap shoes for sale. Cheap shoes available now” proliferated, creating terrible user experiences while gaming rankings.

Algorithm evolution responded to manipulation through increasingly sophisticated updates. Google’s algorithms began incorporating semantic understanding, recognizing synonyms and related terms, evaluating content quality beyond keyword counting, and penalizing obvious over-optimization. The Panda update (2011) targeted low-quality content, the Penguin update (2012) went after webspam tactics such as keyword stuffing, and subsequent updates continued reducing keyword density’s importance.

Modern search algorithms use natural language processing, machine learning, and hundreds of ranking factors that make keyword density obsolete. Current algorithms understand topic coverage, user intent, content quality, and semantic relationships far beyond simple keyword counting. They recognize that comprehensive topic coverage using varied vocabulary indicates expertise more reliably than keyword repetition.

Why Keyword Density No Longer Matters

Multiple factors have rendered keyword density irrelevant or even counterproductive in modern SEO.

Semantic search understanding means search engines recognize meaning beyond exact keyword matches. Google understands that “automobile,” “car,” and “vehicle” are semantically related, that “running shoes” and “jogging sneakers” address similar intent, and that comprehensive content about “email marketing” naturally includes terms like “newsletters,” “campaigns,” and “subscribers” without forcing exact keyword repetition.

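Latent Semantic Indexing (LSI) is often cited in SEO advice, but Google has said it does not rely on LSI, and “LSI keywords” is an industry label rather than a Google concept. The underlying point still holds: modern systems analyze relationships between terms and concepts, understanding topics holistically rather than through single keyword frequencies, and evaluate whether content covers a topic comprehensively through varied vocabulary rather than keyword repetition.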

User experience prioritization in ranking algorithms means readable, engaging content outperforms keyword-optimized gibberish. Many SEOs believe search engines also weigh engagement signals such as time on page, bounce rate, and return-to-SERP behavior, although Google has not confirmed these as direct ranking factors. Either way, naturally written content that serves users performs better than artificially optimized content hitting arbitrary density targets.

Over-optimization penalties actively punish excessive keyword use. Google doesn’t publish exact thresholds, but pages with unnatural keyword repetition risk penalties for keyword stuffing, a practice Google’s spam policies explicitly prohibit. Pursuing specific density percentages risks crossing into penalty territory.

RankBrain and other AI systems use machine learning to understand content quality and relevance without relying on keyword density formulas. These systems evaluate content through pattern recognition that considers hundreds of signals beyond simple keyword counting.

Topic comprehensiveness matters more than keyword repetition. Modern algorithms reward content that thoroughly addresses topics using varied vocabulary, incorporates related concepts, answers common questions, and demonstrates expertise through comprehensive coverage rather than keyword frequency.

What Has Replaced Keyword Density

Instead of targeting specific keyword density percentages, modern SEO focuses on more sophisticated optimization approaches.

Natural keyword usage means including target keywords where they naturally fit: typically in titles, headings, and the first paragraph, and throughout the content where genuinely relevant. There’s no magic number, just natural inclusion that serves readers.

Semantic keyword optimization incorporates related terms, synonyms, and topically related concepts that help search engines understand content comprehensively. Instead of repeating “digital marketing” 50 times, naturally incorporate related terms like “online marketing,” “internet advertising,” “content strategy,” “social media,” and “email campaigns.”

Topic modeling involves creating content that thoroughly covers subjects using varied vocabulary, addressing related questions and subtopics, and demonstrating comprehensive expertise beyond single keyword focus.

User intent satisfaction prioritizes creating content that genuinely answers user questions, solves problems, or provides value rather than mechanically hitting keyword targets. When content serves user needs effectively, keywords naturally appear in appropriate frequencies.

Content quality and engagement matter more than any keyword formula. Well-written, valuable content that keeps users engaged and satisfies their needs outperforms perfectly “optimized” content that provides poor experiences.

TF-IDF (Term Frequency-Inverse Document Frequency) analysis offers a more sophisticated alternative to simple keyword density. It weighs how often a term appears on a page against how many pages in a collection contain it, so terms that are common everywhere score low and terms distinctive to a page score high, helping identify truly distinctive and relevant terms.
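A from-scratch Python sketch of the idea, assuming the simplest textbook weighting (raw term frequency times the natural log of the number of documents divided by the term’s document frequency) and a hypothetical regex tokenizer. Libraries such as scikit-learn’s `TfidfVectorizer` apply smoothing and normalization by default, so their scores won’t match these numbers, and nothing here describes how any search engine actually weights terms.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9']+", text.lower())

def tf_idf(docs: list[str]) -> list[dict[str, float]]:
    """Plain TF-IDF score for every term in every document.

    tf  = (term count in the document) / (words in the document)
    idf = ln(number of documents / documents containing the term)
    """
    tokenized = [tokenize(doc) for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: how many documents contain each term at least once.
    df = Counter(term for words in tokenized for term in set(words))
    scores = []
    for words in tokenized:
        counts = Counter(words)
        scores.append({
            term: (count / len(words)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return scores

pages = [
    "email marketing campaigns reach subscribers",
    "email newsletters keep subscribers engaged",
    "email deliverability depends on sender reputation",
]
scores = tf_idf(pages)
# "email" is on every page, so its idf (and score) is 0; "campaigns" appears
# only on the first page, so it outscores "subscribers" (on two pages) there.
print(sorted(scores[0].items(), key=lambda kv: kv[1], reverse=True)[:3])
```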

How to Optimize Keywords Without Obsessing Over Density

Modern keyword optimization requires strategic thinking beyond simple counting.

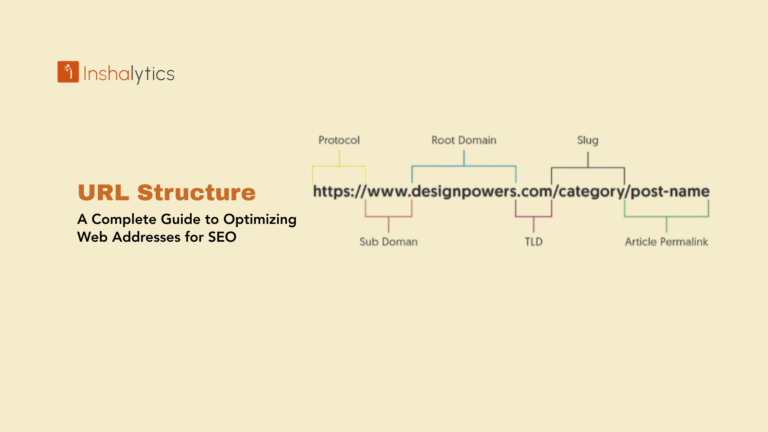

Place keywords strategically in high-impact locations: the on-page title (usually the H1 heading), the title tag shown in search results, the meta description, the URL slug when it fits naturally, the first 100 words of content, and subheadings where relevant. Strategic placement matters more than overall frequency.
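Because placement matters more than frequency, a pre-publish check can look at each location instead of computing a density. This Python sketch is hypothetical: `placement_report` and its field names are illustrative, not any tool’s API. It assumes you already have the page’s title tag, H1, meta description, slug, body text, and subheadings as plain strings, and it checks only for the exact keyword phrase.

```python
import re

def tokenize(text: str) -> list[str]:
    # Hyphens and underscores are not in the character class, so a slug like
    # "email-marketing-guide" splits into separate words.
    return re.findall(r"[a-z0-9']+", text.lower())

def contains_phrase(words: list[str], phrase: list[str]) -> bool:
    n = len(phrase)
    return any(words[i:i + n] == phrase for i in range(len(words) - n + 1))

def placement_report(keyword: str, *, title: str, h1: str, meta_description: str,
                     url_slug: str, body: str, subheadings: list[str]) -> dict[str, bool]:
    """Report which high-impact locations contain the keyword phrase."""
    phrase = tokenize(keyword)
    if not phrase:
        raise ValueError("keyword must contain at least one word")
    report = {
        "title tag": contains_phrase(tokenize(title), phrase),
        "h1": contains_phrase(tokenize(h1), phrase),
        "meta description": contains_phrase(tokenize(meta_description), phrase),
        "url slug": contains_phrase(tokenize(url_slug), phrase),
        # Only the opening 100 words of the body count here.
        "first 100 words": contains_phrase(tokenize(body)[:100], phrase),
    }
    report["any subheading"] = any(contains_phrase(tokenize(h), phrase) for h in subheadings)
    return report

report = placement_report(
    "email marketing",
    title="Email Marketing Guide for Small Businesses",
    h1="A Practical Guide to Email Marketing",
    meta_description="Learn how to build a list and write campaigns people open.",
    url_slug="email-marketing-guide",
    body="Email marketing still delivers strong returns for small teams...",
    subheadings=["Building your list", "Measuring email marketing results"],
)
for location, found in report.items():
    print(f"{location}: {'yes' if found else 'no'}")
```

A “no” is a prompt to consider the location, not a rule: the meta description here reads fine without the exact phrase.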

Write naturally for humans first, then review to ensure target keywords appear in key locations. If content reads well and thoroughly addresses the topic, keyword usage likely falls into acceptable ranges naturally.

Use keyword variations including synonyms, related terms, and natural language variations rather than repeating exact phrases. This appears more natural, improves readability, and helps rank for related searches.
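To see whether a draft leans almost entirely on one exact phrase, you can count the exact keyword alongside its variations. This is a hypothetical Python helper: the variant list is one you supply yourself, and the exact-match share it reports is an informal signal with no recommended value.

```python
import re

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9']+", text.lower())

def count_phrase(words: list[str], phrase: list[str]) -> int:
    n = len(phrase)
    return sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase)

def variant_mix(text: str, primary: str, variants: list[str]) -> tuple[dict[str, int], float]:
    """Count the primary phrase and each variant, plus the primary's share of all mentions.

    Assumes no term contains another as a sub-phrase; overlapping terms
    would be double-counted.
    """
    words = tokenize(text)
    counts = {term: count_phrase(words, tokenize(term)) for term in [primary, *variants]}
    total = sum(counts.values())
    exact_share = counts[primary] / total if total else 0.0
    return counts, exact_share

text = ("Digital marketing covers many channels. Online marketing includes search, "
        "and internet advertising is one part of digital marketing.")
counts, share = variant_mix(text, "digital marketing", ["online marketing", "internet advertising"])
print(counts, share)
# {'digital marketing': 2, 'online marketing': 1, 'internet advertising': 1} 0.5
```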

Cover topics comprehensively by addressing related questions, incorporating relevant subtopics, and demonstrating expertise through thorough content. Comprehensive coverage naturally includes keywords and related terms appropriately.

Focus on search intent by ensuring content type and format match what users actually seek when searching your target keywords. Intent satisfaction matters far more than keyword density.

Test readability by reading content aloud or having others review it. If keyword usage feels forced or unnatural, reduce frequency regardless of density percentages.
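Reading aloud is the real test, but a mechanical pre-check can point you at the worst spots. This hypothetical Python helper flags sentences that contain the keyword more than once; the one-per-sentence limit is an arbitrary assumption, and the sentence splitter is deliberately naive. Run on the keyword-stuffed “cheap shoes” example from earlier in this post, it singles out the middle sentence.

```python
import re

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9']+", text.lower())

def count_phrase(words: list[str], phrase: list[str]) -> int:
    n = len(phrase)
    return sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase)

def repetitive_sentences(text: str, keyword: str,
                         max_per_sentence: int = 1) -> list[tuple[int, str]]:
    """Return (count, sentence) for sentences that repeat the keyword too often."""
    phrase = tokenize(keyword)
    if not phrase:
        return []
    # Naive split: a sentence ends at ., ! or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    flagged = []
    for sentence in sentences:
        hits = count_phrase(tokenize(sentence), phrase)
        if hits > max_per_sentence:
            flagged.append((hits, sentence))
    return flagged

stuffed = ("Buy cheap shoes. If you want to buy cheap shoes, our cheap shoes store "
           "has cheap shoes for sale. Cheap shoes available now")
for hits, sentence in repetitive_sentences(stuffed, "cheap shoes"):
    print(hits, sentence)
# 3 If you want to buy cheap shoes, our cheap shoes store has cheap shoes for sale.
```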

Monitor user engagement metrics like time on page, bounce rate, and pages per session. These signals indicate whether content satisfies users better than any keyword density calculation.

Common Keyword Density Mistakes

Several errors stem from outdated thinking about keyword optimization.

Obsessing over exact percentages wastes time that could be spent improving content quality. No magic density number guarantees rankings or prevents penalties.

Keyword stuffing by unnaturally forcing keywords throughout content damages readability and risks penalties. If you’re inserting keywords just to hit density targets, you’re over-optimizing.

Using density tools religiously and adjusting content based on their recommendations prioritizes algorithm manipulation over user value. Use these tools for rough awareness, not precise targeting.

Sacrificing readability to achieve specific keyword frequencies creates poor user experiences that ultimately harm rankings through negative engagement signals.

Ignoring synonyms and related terms in favor of exact-match keywords reads unnaturally and misses semantic optimization opportunities.

Applying one-size-fits-all density rules across all content types ignores that different formats (blog posts, product pages, landing pages) naturally have different keyword usage patterns.

Neglecting user needs in favor of keyword formulas produces content that may technically hit density targets but fails to satisfy searchers, which is ultimately what rankings reward.

When Keyword Frequency Still Matters (Sort Of)

While keyword density as a percentage target is obsolete, keyword usage still matters in limited ways.

Relevance signals require mentioning target keywords enough for search engines to confidently associate your page with those topics. Mentioning “email marketing” once in a 2,000-word article probably won’t signal strong topical focus. Using it reasonably throughout (title, subheadings, naturally in content) establishes clear relevance.

Avoiding extreme under-use means ensuring target keywords appear sufficiently to indicate topical focus. There’s a difference between natural usage and barely mentioning keywords at all.

Preventing obvious over-optimization suggests reasonable upper limits. While no specific density triggers penalties, pages with 10% keyword density (100 occurrences in 1,000 words) clearly cross into problematic territory.

The practical range for most content probably falls between 0.5-2% for primary keywords, but this should result naturally from comprehensive topic coverage rather than targeting these percentages deliberately.
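If you want a quick sanity check on a finished draft, a few lines of code can map a density onto rough bands. In this hypothetical Python sketch, the cut-offs are assumptions borrowed from the figures in this section (0.5-2% as typical, 10% as clearly excessive); they are not published search-engine thresholds, and the result is a prompt to reread, never a target to hit.

```python
def density_check(occurrences: int, total_words: int) -> tuple[float, str]:
    """Classify keyword density into rough bands for a sanity check, not a target.

    Bands follow the rough ranges discussed in this post, not any
    search engine's documented rules.
    """
    if total_words <= 0:
        raise ValueError("total_words must be positive")
    density = occurrences * 100 / total_words
    if density < 0.5:
        label = "low: is the keyword in the title, headings, and opening?"
    elif density <= 2.0:
        label = "typical"
    elif density < 10.0:
        label = "high: reread for forced repetition"
    else:
        label = "very high: likely keyword stuffing"
    return density, label

for occurrences in (2, 10, 30, 100):
    print(occurrences, density_check(occurrences, 1000))
```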

Explaining Keyword Density to Clients

SEO professionals often face clients who’ve heard about keyword density and want specific targets.

Acknowledge its history by explaining that keyword density mattered in early search but modern algorithms have evolved far beyond simple keyword counting.

Emphasize user focus by framing optimization around creating valuable content that serves users rather than hitting arbitrary keyword percentages.

Replace density metrics with more meaningful KPIs such as ranking improvements, organic traffic growth, engagement metrics, and conversion rates: measurements that actually affect business results.

Demonstrate the modern approach by showing that competitor content that ranks well often doesn’t obsess over keyword density but covers topics comprehensively and naturally.

Educate about risks by explaining how pursuing specific density targets can lead to over-optimization, penalties, and poor user experiences that ultimately harm rankings.

Alternative Metrics and Approaches

Several more useful metrics and approaches have replaced keyword density in sophisticated SEO strategies.

Content comprehensiveness scores evaluate how thoroughly content covers topics compared to top-ranking competitors, often using tools that analyze semantic richness.

Topical authority indicators assess whether content demonstrates expertise through comprehensive coverage, authoritative sources, and in-depth treatment.

Engagement metrics including time on page, scroll depth, and bounce rate indicate whether content satisfies users better than keyword density ever could.

Semantic keyword coverage analyzes whether content includes relevant related terms and concepts that comprehensive topic treatment naturally incorporates.
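As a simple illustration of semantic coverage, this Python sketch measures what share of a related-term list appears in a draft. The term list is an input you’d assemble yourself (for example, “campaigns,” “subscribers,” and “newsletters” for email marketing). The `term_coverage` function is hypothetical and does exact-phrase matching only, which is far cruder than how commercial content tools or search engines model topics.

```python
import re

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9']+", text.lower())

def contains_phrase(words: list[str], phrase: list[str]) -> bool:
    n = len(phrase)
    return any(words[i:i + n] == phrase for i in range(len(words) - n + 1))

def term_coverage(text: str, related_terms: list[str]) -> tuple[float, list[str]]:
    """Share of related terms that appear in the text, plus the ones that don't.

    Matching is exact after lowercasing, with no stemming, so "newsletter"
    does not match "newsletters".
    """
    if not related_terms:
        return 1.0, []
    words = tokenize(text)
    missing = [t for t in related_terms if not contains_phrase(words, tokenize(t))]
    return 1 - len(missing) / len(related_terms), missing

draft = ("Plan each campaign around what your subscribers want, "
         "then test subject lines before you send.")
coverage, missing = term_coverage(draft, ["campaign", "subscribers", "newsletters", "open rate"])
print(coverage, missing)  # 0.5 ['newsletters', 'open rate']
```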

Search intent alignment evaluates whether content format and approach match what actually ranks for target keywords.

Keyword Density Tools: Limited Value

Many SEO tools still calculate keyword density, but their utility is limited.

Use them for awareness, not targets. Checking keyword density can help you notice if you’ve barely mentioned target keywords or if you’ve gone overboard with repetition.

Don’t optimize to specific percentages these tools suggest. Treat them as rough checks, not scientific formulas requiring precise adjustment.

Focus on the competitive-analysis features of these tools: understanding which terms top-ranking content includes and how comprehensively it covers topics, rather than matching exact densities.
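The competitive-analysis idea can be sketched in a few lines of Python: find words that most top-ranking pages share but your draft never uses. Everything here is an assumption for illustration, including the `term_gaps` helper, the 50% threshold, and the toy stopword list. It works on single words and ignores meaning, so its output is a list of prompts for a human to consider, not terms to insert mechanically.

```python
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9']+", text.lower())

# A tiny illustrative stopword list; real tools use much larger ones.
STOPWORDS = {"a", "an", "and", "by", "each", "for", "in", "of", "on", "the", "to", "with", "your"}

def term_gaps(my_page: str, competitor_pages: list[str], min_share: float = 0.5) -> list[str]:
    """Words used by at least `min_share` of competitor pages but absent from my_page."""
    mine = set(tokenize(my_page))
    # Count each word at most once per competitor page (document frequency).
    df = Counter(word for page in competitor_pages for word in set(tokenize(page)))
    threshold = min_share * len(competitor_pages)
    return sorted(
        word for word, count in df.items()
        if count >= threshold and word not in mine and word not in STOPWORDS
    )

competitors = [
    "Segment subscribers and test subject lines for each campaign.",
    "Grow subscribers with a signup form and segment your list.",
    "Track open rate and segment subscribers by engagement.",
]
print(term_gaps("Send a campaign to your list.", competitors))  # ['segment', 'subscribers']
```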

Prioritize quality scoring features in modern SEO tools that evaluate content comprehensiveness, readability, and topic coverage over simple density calculations.

Conclusion

Keyword density represents an outdated SEO metric from an era when search engines relied on simple keyword matching to determine relevance. While it once legitimately influenced rankings, modern algorithms using semantic understanding, natural language processing, and machine learning have rendered percentage-based keyword density optimization obsolete and potentially harmful. Obsessing over achieving specific keyword density percentages wastes time, risks over-optimization penalties, and prioritizes algorithm manipulation over user value.

Modern keyword optimization focuses on natural usage in strategic locations, comprehensive topic coverage using varied vocabulary, semantic keyword inclusion, and above all, creating content that genuinely satisfies user intent and provides value. When you write naturally for humans while ensuring target keywords appear in key locations like titles and headings, keyword frequency typically falls into acceptable ranges without deliberate density targeting.

The evolution from keyword density to topic comprehensiveness reflects search engines’ maturation toward understanding meaning and serving users rather than matching strings. Success in modern SEO comes from creating genuinely helpful content that thoroughly addresses topics, serves user needs, and naturally incorporates relevant keywords and related terms. Forget arbitrary density targets; focus on content quality, user satisfaction, and comprehensive topic treatment, and keyword optimization will take care of itself.