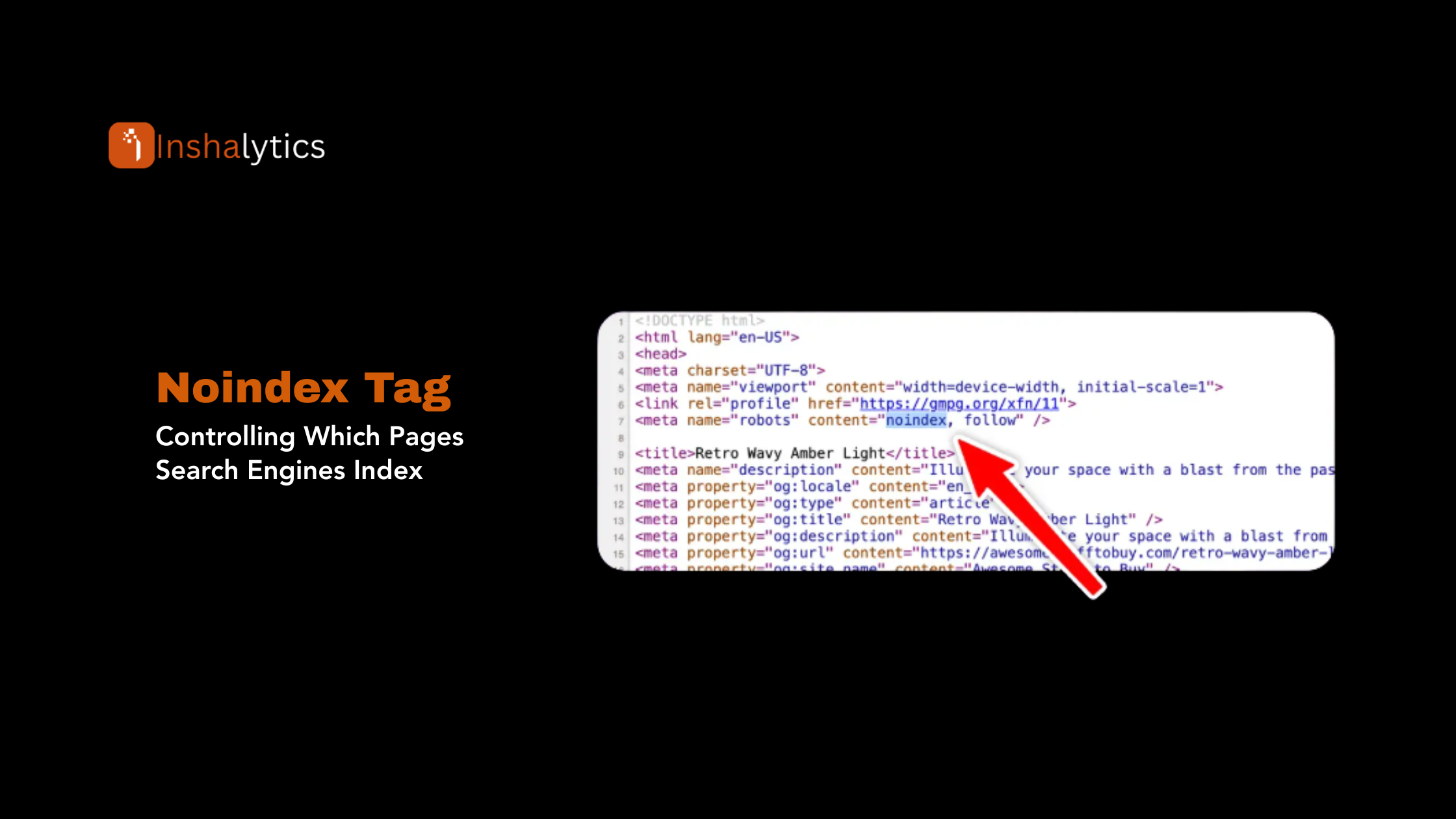

Definition: Noindex is a meta tag instruction or HTTP header directive that tells search engines not to include a page in their index. When search engine crawlers encounter a noindex directive, they will not add the page to their searchable database, preventing it from appearing in search results even if the page is crawled and its content is analyzed.

What Is the Noindex Tag?

The noindex directive explicitly instructs search engines to exclude a page from their index, the massive database of web pages that powers search results. While search engines may still crawl pages with noindex tags to discover links and understand site structure, they won’t display these pages in search results for any query. This gives webmasters precise control over which content appears in search engines and which remains accessible only through direct navigation or internal links.

HTML meta tag implementation:

<meta name="robots" content="noindex">

HTTP header implementation:

X-Robots-Tag: noindex

The noindex instruction can be combined with other directives:

<meta name="robots" content="noindex, follow">

<meta name="robots" content="noindex, nofollow">
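To illustrate how a tool might read these combined values, here is a minimal Python sketch. The function names `parse_robots_content` and `is_indexable` are hypothetical, and treating `none` as shorthand for `noindex, nofollow` follows Google's documented behavior; other crawlers may differ.

```python
def parse_robots_content(content):
    """Split a robots content value into a set of lowercase directives."""
    return {part.strip().lower() for part in content.split(",") if part.strip()}


def is_indexable(content):
    """Return False when the value contains noindex (or none, which implies it)."""
    directives = parse_robots_content(content)
    # "none" is assumed to be equivalent to "noindex, nofollow".
    return not ({"noindex", "none"} & directives)


if __name__ == "__main__":
    for value in ("noindex, follow", "noindex, nofollow", "index, follow"):
        print(value, "->", "indexable" if is_indexable(value) else "excluded")
```

Normalizing case and whitespace first means `NOINDEX,follow` and `noindex, follow` are treated the same way.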

How Noindex Works

Understanding the technical mechanics of noindex helps ensure proper implementation and expected outcomes.

Crawling vs. Indexing Distinction

- Crawling: Search engine bots visit and download page content

- Indexing: Search engines add the page to their searchable database

Noindex affects indexing, not crawling. Search engines may still crawl noindexed pages to:

- Discover links to other pages

- Understand site architecture

- Detect changes to noindex status

- Analyze content for other purposes

Implementation Methods

Meta robots tag in HTML <head> section:

<head>

<meta name="robots" content="noindex">

</head>

X-Robots-Tag HTTP header (useful for non-HTML files such as PDFs, which have no <head> to hold a meta tag):

X-Robots-Tag: noindex
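As a rough sketch of how the header might be attached to non-HTML responses, the Python WSGI middleware below adds `X-Robots-Tag: noindex` to any path ending in a listed extension. The name `add_noindex_header` and the extension list are assumptions for illustration; in practice the header is often set in web server configuration instead.

```python
# Hypothetical list of file types that should stay out of the index.
NOINDEX_EXTENSIONS = (".pdf", ".docx")


def add_noindex_header(app, extensions=NOINDEX_EXTENSIONS):
    """Wrap a WSGI app so matching paths get an X-Robots-Tag: noindex header."""

    def wrapped(environ, start_response):
        path = environ.get("PATH_INFO", "").lower()

        def patched_start_response(status, headers, exc_info=None):
            # str.endswith accepts a tuple, so any listed extension matches.
            if path.endswith(tuple(extensions)):
                headers = list(headers) + [("X-Robots-Tag", "noindex")]
            return start_response(status, headers, exc_info)

        return app(environ, patched_start_response)

    return wrapped
```

Any WSGI application can be wrapped this way, for example `application = add_noindex_header(application)`.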

Robots.txt cannot implement noindex. If robots.txt blocks crawling, search engines cannot see noindex tags, potentially causing unintended indexation issues.

Processing Timeline

- Search engine crawls page

- Detects noindex directive in HTML or header

- Removes page from index (if previously indexed)

- Page no longer appears in search results

- Crawler may continue visiting to check status changes

Deindexation isn’t instantaneous. After noindex is added, the page must be recrawled before the directive is seen, so it may take days or weeks for pages to fully disappear from search results.

When to Use Noindex

Strategic noindex implementation prevents low-value pages from cluttering search results while preserving access for legitimate users.

Duplicate Content

- Printer-friendly versions: Noindex alternate formats to prevent duplicate content issues

- Parameter-driven pages: Filtered, sorted, or searched results that create near-infinite variations

- Session ID URLs: Dynamic URLs that include session identifiers

- Paginated series: Subsequent pagination pages beyond page one (weigh this against the long-tail traffic risk covered under Common Noindex Mistakes)

Low-Value Pages

- Thank-you pages: Conversion confirmation pages offering no search value

- Internal search results: Site search pages creating thin, duplicate-like content

- Shopping cart pages: E-commerce process pages that shouldn’t rank

- Login/registration pages: Functional pages without informational value

- Admin pages: Backend or administrative sections

Temporary Pages

- Limited-time promotions: Campaign landing pages with expiration dates

- Event pages: Past event pages no longer relevant

- Seasonal content: Out-of-season pages until they become relevant again

Development and Staging

- Staging environments: Testing sites that shouldn’t appear in search

- Development versions: Work-in-progress content

- Test pages: Quality assurance or experimental pages

Thin or Problematic Content

- Automatically generated pages: Template-driven pages without unique value

- User-generated content hubs: Directory pages without substantial content

- Affiliate pages: Pure affiliate content adding minimal value

When NOT to Use Noindex

Several situations seem to warrant noindex but actually require different solutions.

Pages Blocked by Robots.txt

If robots.txt prevents crawling, search engines can’t see noindex tags. This creates problems when pages have external links: Google may index the bare URL without knowing its content, because it cannot access the page to read the noindex instruction.

Solution: Allow crawling but use noindex tags, or remove pages entirely if neither crawling nor indexing is desired.
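One way to catch this conflict is to compare a list of pages known to carry noindex against the site's robots.txt rules. The sketch below uses Python's standard `urllib.robotparser`; the function name `blocked_noindex_urls`, the sample rules, and the example URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def blocked_noindex_urls(robots_txt, noindex_urls, user_agent="Googlebot"):
    """Return the noindexed URLs that robots.txt prevents the crawler from fetching."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # A blocked URL can never have its noindex directive read.
    return [url for url in noindex_urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    rules = "User-agent: *\nDisallow: /private/\n"
    pages = [
        "https://example.com/private/thank-you",
        "https://example.com/cart",
    ]
    print(blocked_noindex_urls(rules, pages))
```

Any URL this returns needs either its robots.txt rule lifted or a different removal method, since its noindex tag is invisible to the crawler.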

Important Pages You Want Ranked

Accidentally noindexing important pages (the homepage, key product pages, popular content) is a catastrophic SEO error: once the pages are recrawled and dropped from the index, their organic traffic disappears.

Prevention: Implement systematic pre-launch checklists ensuring noindex tags from development don’t reach production.

Temporary Content Issues

If page content needs improvement but you want it indexed eventually, don’t use noindex. Instead:

- Improve content quality

- Add unique value

- Enhance optimization

Noindex should address permanent indexation decisions, not temporary content quality issues.

Site-Wide Indexation Prevention

Using noindex on every page to prevent site indexation is inefficient. Better approaches include:

- Password protection

- HTTP authentication

- Robots.txt disallow (with understanding of limitations)

Noindex vs. Other Indexation Controls

Understanding distinctions between various indexation control methods prevents confusion and misapplication.

Noindex vs. Robots.txt

Robots.txt:

- Prevents crawling

- Search engines can’t see page content

- URLs with external links might still get indexed without content

- Site-level or directory-level control

Noindex:

- Allows crawling but prevents indexing

- Search engines can see content and follow links

- More precise page-level control

- Requires crawling to be effective

Noindex vs. Canonical Tags

Canonical tags:

- Indicate preferred version of duplicate content

- All versions remain indexable but one is prioritized

- Consolidates ranking signals

Noindex:

- Completely prevents indexing

- Page won’t appear in search results

- More definitive exclusion

Use canonical tags when pages are similar but should have one preferred version. Use noindex when pages shouldn’t be indexed at all.

Noindex vs. 404/410 Status Codes

404 (Not Found) or 410 (Gone):

- Pages don’t exist or are permanently removed

- Return error status codes

- Eventually get removed from index

Noindex:

- Pages exist and function normally

- Accessible to users via direct links

- Simply excluded from search results

Use status codes for removed content, noindex for content that exists but shouldn’t be searchable.

Common Noindex Mistakes

Several frequent errors undermine SEO performance through improper noindex implementation.

Accidentally Noindexing Important Pages

Mistake: Development noindex tags remain on production sites after launch.

Impact: Catastrophic traffic loss as key pages disappear from search results.

Prevention:

- Implement launch checklists

- Use environment-specific configurations

- Audit meta tags before going live

- Set up monitoring alerts for unexpected noindex
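The environment-specific configuration and pre-launch audit could look something like the Python sketch below. The environment names, the `robots_meta_tag` and `launch_check` helpers, and the choice to treat only the exact name "production" as indexable are all assumptions for illustration.

```python
NOINDEX_TAG = '<meta name="robots" content="noindex, nofollow">'


def robots_meta_tag(environment):
    """Return the robots meta tag for an environment ('' means indexable)."""
    # Only the exact name "production" is treated as indexable (an assumption);
    # anything else, including typos, stays out of the index.
    if environment.strip().lower() == "production":
        return ""
    return NOINDEX_TAG


def launch_check(rendered_html, environment):
    """Return True when a production page contains no noindex directive."""
    if environment.strip().lower() != "production":
        return True
    # Crude substring check; a real audit would parse the meta tags and headers.
    return "noindex" not in rendered_html.lower()
```

Driving the tag from one environment setting, rather than editing templates by hand, removes the step where a development noindex is most likely to be forgotten.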

Blocking Noindex Pages with Robots.txt

Mistake: Using robots.txt to prevent crawling of pages that need noindex.

Impact: Search engines can’t see noindex tags, potentially causing indexation anyway.

Solution: Allow crawling but implement noindex, or remove pages entirely.

Noindex on Paginated Series

Mistake: Noindexing all pagination pages except page one.

Impact: Loses long-tail traffic opportunities and prevents content discovery.

Better approach: Allow indexing and use self-referencing canonicals. rel="prev"/rel="next" markup can still be included, but Google has stated that it no longer uses it as an indexing signal.

Inconsistent Signals

Mistake: Noindexing pages while also submitting them in XML sitemaps.

Impact: Confusing signals to search engines.

Solution: Remove noindexed pages from sitemaps or remove noindex tags.
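A simple consistency check is to list the URLs in the XML sitemap and flag any that are also known to be noindexed. The Python sketch below assumes a standard sitemaps.org `urlset` document and a set of noindexed URLs gathered elsewhere (for example from a crawl); the function names are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Return every <loc> value from a sitemap urlset document."""
    root = ET.fromstring(sitemap_xml)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


def conflicting_urls(sitemap_xml, noindexed_urls):
    """Return sitemap URLs that also carry a noindex directive."""
    noindexed = set(noindexed_urls)
    return [url for url in sitemap_urls(sitemap_xml) if url in noindexed]
```

Each URL returned should either lose its noindex directive or be dropped from the sitemap.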

Over-Using Noindex

Mistake: Noindexing pages unnecessarily out of overly aggressive concern about content quality.

Impact: Reduces organic search footprint, missing traffic opportunities.

Better approach: Improve content quality rather than hiding it.

Checking Noindex Status

Multiple methods identify whether pages include noindex directives.

Manual Inspection

View page source:

- Right-click page and select “View Page Source”

- Search for “noindex” in source code

- Check <head> section for meta robots tags

Inspect element:

- Right-click page and select “Inspect”

- Navigate to <head> section

- Look for meta robots tags
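The same manual check can be scripted. The sketch below uses Python's standard `html.parser` to collect directives from meta robots tags in a page's HTML; the class and function names are hypothetical, and it inspects only meta tags, not the X-Robots-Tag header.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            content = attr.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",") if d.strip()]


def has_noindex(html):
    """Return True when any robots meta tag in the HTML contains noindex or none."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives or "none" in parser.directives
```

Parsing the tags rather than searching the raw source for “noindex” avoids false positives when the word appears in body text or in an unrelated meta tag.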

Browser Extensions

- SEO Minion: Displays indexation status including noindex

- META SEO Inspector: Shows all meta tags including robots directives

- Detailed SEO Extension: Provides comprehensive on-page SEO data

SEO Tools

- Screaming Frog SEO Spider: Crawls entire sites and identifies noindexed pages

- Sitebulb: Provides detailed indexation analysis and issues

- Google Search Console: URL Inspection tool shows whether Google can index specific URLs

Search Console URL Inspection

- Enter URL in URL Inspection tool

- Check the “Coverage” section (labeled “Page indexing” in newer versions of Search Console)

- Look for “Excluded by ‘noindex’ tag” status

Implementing Noindex Strategically

Strategic noindex implementation balances indexation control with maximizing legitimate organic visibility.

Create Indexation Strategy

Audit all pages, assigning each to one of these categories:

- Must index (key content)

- Should index (valuable content)

- Consider indexing (marginal value)

- Should NOT index (low value, duplicate, functional)

Document decisions explaining why specific pages use noindex.

Implement systematically across page types with clear policies.

Platform-Specific Implementation

WordPress: Use SEO plugins (Yoast, Rank Math) with built-in noindex controls.

Shopify: Add noindex through theme customization or apps.

Custom CMS: Implement programmatically based on page attributes or categories.

Static sites: Manually add meta tags to page templates.
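For a custom CMS, the programmatic rule might be as simple as the Python sketch below. The page type names, the `has_unique_content` flag, and the `robots_directive` function are hypothetical; a real policy would come from the documented indexation strategy.

```python
# Hypothetical page types taken from the "should NOT index" category.
NOINDEX_PAGE_TYPES = {"thank_you", "internal_search", "cart", "login", "admin"}


def robots_directive(page_type, has_unique_content=True):
    """Return the robots content value for a page based on its attributes."""
    if page_type in NOINDEX_PAGE_TYPES or not has_unique_content:
        # Keep "follow" so crawlers can still discover linked pages.
        return "noindex, follow"
    return "index, follow"


if __name__ == "__main__":
    for page_type in ("product", "cart", "thank_you"):
        print(page_type, "->", robots_directive(page_type))
```

Keeping the rule in one function means a policy change is made once instead of template by template.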

Monitor Impact

Track indexed pages in Google Search Console over time.

Monitor organic traffic for unexpected drops indicating accidental noindex.

Review regularly ensuring indexation strategy remains appropriate as site evolves.
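A monitoring alert can be approximated by comparing consecutive indexed-page counts and flagging sharp drops. The Python sketch below assumes the counts have already been exported as (date, count) pairs; the 20% threshold and the function name `unexpected_drops` are arbitrary choices for illustration.

```python
def unexpected_drops(counts, threshold=0.2):
    """Return the dates where the indexed-page count fell by at least `threshold`.

    `counts` is a chronologically ordered list of (date_label, indexed_pages) pairs.
    """
    alerts = []
    for (_, previous), (day, current) in zip(counts, counts[1:]):
        # Relative drop compared with the previous measurement.
        if previous > 0 and (previous - current) / previous >= threshold:
            alerts.append(day)
    return alerts
```

A flagged date is only a prompt to investigate; index counts also fall for reasons unrelated to noindex.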

Recovery from Accidental Noindex

If important pages were accidentally noindexed:

- Remove noindex tags immediately

- Submit URLs for re-crawling via URL Inspection tool

- Update XML sitemap ensuring pages are included

- Monitor reindexation which may take several days to weeks

- Analyze traffic impact and document lessons learned

Noindex and Mobile-First Indexing

Mobile-first indexing considerations affect noindex implementation.

Ensure consistency between mobile and desktop versions. Mismatched noindex tags between the two versions send search engines conflicting signals.

Test mobile rendering to verify noindex tags appear correctly on mobile-rendered pages.

Prioritize mobile implementation since Google primarily uses mobile versions for indexing.

Advanced Noindex Techniques

Sophisticated implementations handle complex scenarios.

Conditional Noindex

Implement noindex based on:

- User authentication status

- Geographic location

- Date/time (for time-sensitive content)

- User agent (cautiously and legitimately)
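The date-based case is the easiest of these to sketch. The Python function below switches a promotion page to noindex once its expiration date has passed; the function name and the idea of storing an `expires_on` date per page are assumptions for illustration.

```python
from datetime import date


def promo_robots_directive(expires_on, today=None):
    """Return the robots content value for a time-limited page."""
    today = today or date.today()
    # The page stays indexable through its last valid day.
    if today > expires_on:
        return "noindex, follow"
    return "index, follow"


if __name__ == "__main__":
    print(promo_robots_directive(date(2024, 12, 31), today=date(2025, 1, 1)))
```

Passing `today` explicitly keeps the rule easy to test; in production the default of the current date would normally be used.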

Temporary Noindex

Temporarily noindex content that will become valuable later:

- Upcoming event pages before event details finalize

- Seasonal content out of season

- Products not yet available for purchase

Partial Site Noindex

Noindex entire sections systematically:

- Blog category archives if thin

- Tag pages with minimal content

- Filtered e-commerce views creating duplicates

Conclusion

The noindex directive provides precise control over which pages appear in search engine indexes, allowing webmasters to prevent low-value, duplicate, or functional pages from cluttering search results while maintaining their utility for direct access. Implemented through meta tags or HTTP headers, noindex tells search engines to exclude pages from their searchable databases even while allowing continued crawling for link discovery and site structure understanding.

Strategic noindex use targets duplicate content variations, low-value functional pages, temporary content, and development environments, preventing these pages from competing with valuable content for rankings. However, careful implementation is essential: accidentally noindexing important pages causes catastrophic traffic losses, while blocking noindexed pages with robots.txt prevents search engines from seeing noindex instructions, creating indexation confusion.

Success requires a systematic indexation strategy documenting which pages should and shouldn’t be indexed, regular auditing to catch accidental noindex errors, monitoring of indexed page counts and organic traffic, and a clear understanding of when noindex is appropriate versus when other solutions like canonical tags, content improvement, or page removal better address underlying issues. When used properly as part of a comprehensive technical SEO strategy, noindex ensures search engines focus on your best content while functional and duplicate pages remain accessible to users without diluting your organic search presence.

Key Takeaways

- Noindex prevents indexing but allows crawling and link discovery

- Implemented via a meta robots tag in the page <head> or an X-Robots-Tag HTTP header

- Essential for duplicate content, low-value pages, and functional pages

- Never block noindexed pages with robots.txt, because search engines then can’t see the directive

- Accidentally noindexing important pages causes severe traffic loss

- Regular audits prevent development noindex tags from reaching production

- Strategic implementation balances indexation control with organic visibility

- Monitor indexed page counts and traffic to catch unintended noindex issues