Few topics in search engine optimization generate as much confusion and anxiety as duplicate content. Myths and misconceptions abound: some believe that any repeated text triggers severe penalties, while others dismiss duplicate content concerns entirely. The truth lies somewhere between these extremes. Understanding what duplicate content actually is, when it becomes problematic, and how to address it properly is essential for maintaining healthy search visibility and avoiding self-inflicted SEO wounds.

What Is Duplicate Content?

Duplicate content refers to identical or very similar content appearing on multiple pages or websites, which can cause ranking issues. This duplication can occur within a single website (internal duplicate content) or across different domains (external duplicate content). The key characteristic is substantial similarity—not just a repeated phrase or paragraph, but significant portions of text that appear in multiple locations.

Search engines face a fundamental challenge when encountering duplicate content: which version should they show in search results? When multiple pages contain essentially the same information, displaying all versions wastes valuable search result space and creates a poor user experience. Search engines must choose which version to rank, potentially ignoring the others entirely or splitting ranking signals among duplicates in ways that weaken all versions.

It’s crucial to dispel the most persistent myth immediately: Google does not impose a “duplicate content penalty” in most cases. Unless duplicate content is clearly manipulative or deceptive, you won’t be penalized. However, duplicate content does create ranking problems because search engines filter out redundant results, meaning your duplicate pages may simply not appear in search results rather than being actively penalized.

Types of Duplicate Content

Duplicate content manifests in various forms, each presenting unique challenges and requiring different solutions.

Internal duplicate content occurs when multiple pages within your own website contain the same or substantially similar content. This often happens accidentally through technical issues, site architecture decisions, or content management practices. Examples include product descriptions appearing on multiple category pages, printer-friendly versions of articles, or content accessible through multiple URL parameters.

External duplicate content exists when content from your site appears on other domains, or when you’ve republished content from other sources. This might result from content syndication, scrapers copying your content without permission, or your own republication of articles across multiple sites you control.

Near-duplicate content includes pages that are extremely similar but not identical. These might be product pages for similar items with only minor specification differences, location-based pages with templated content and only city names changed, or articles covering similar topics with significant text overlap.

Legitimate duplicate content serves valid purposes but still requires proper handling. This includes quoted material with attribution, product descriptions provided by manufacturers, legal disclaimers appearing across multiple pages, or legitimate syndication with proper canonical implementation.

Common Causes of Duplicate Content

Understanding how duplicate content emerges helps you prevent and address these issues proactively.

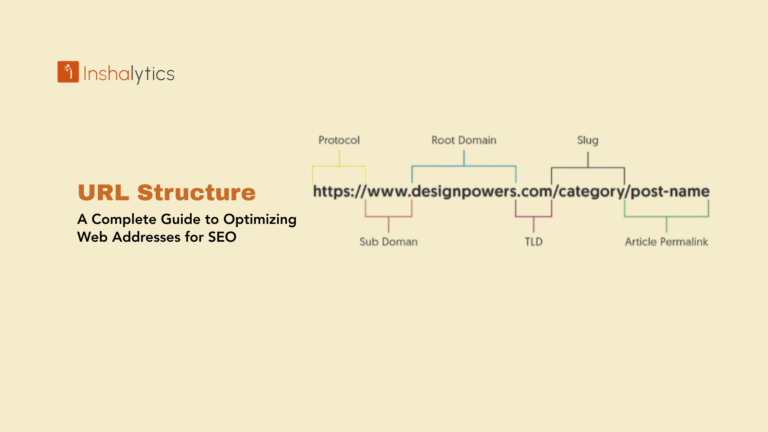

URL variations create one of the most common duplicate content problems. A single page might be accessible through multiple URLs due to technical configurations:

- HTTP vs. HTTPS versions

- www vs. non-www subdomains

- Trailing slash variations (example.com/page vs. example.com/page/)

- Parameter-based URLs that create multiple paths to identical content

- Mobile-specific URLs that duplicate desktop content
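The variations above can be collapsed into a single address. Here is a minimal Python sketch of URL normalization. It assumes a hypothetical site whose preferred form is HTTPS, no `www.` prefix and no trailing slash. The `normalize_url` helper and those preferences are illustrative only. The sketch ignores query parameters and mobile subdomains. On a live site you would enforce the same choices with redirects and canonical tags, not just in code like this.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical site preferences, chosen for illustration only.
PREFERRED_SCHEME = "https"
STRIP_WWW = True


def normalize_url(url: str) -> str:
    """Collapse protocol, www, and trailing-slash variants into one form."""
    parts = urlsplit(url)
    host = parts.hostname or ""  # .hostname is already lowercased
    if STRIP_WWW and host.startswith("www."):
        host = host[len("www."):]
    # Keep non-default ports; drop :80 and :443.
    if parts.port not in (None, 80, 443):
        host = f"{host}:{parts.port}"
    path = parts.path or "/"
    if path != "/":
        path = path.rstrip("/") or "/"
    # The query is kept as-is; the fragment is dropped (browsers never send it).
    return urlunsplit((PREFERRED_SCHEME, host, path, parts.query, ""))


variants = [
    "http://example.com/page",
    "http://www.example.com/page/",
    "https://WWW.Example.com/page",
    "https://example.com/page/",
]
print({normalize_url(u) for u in variants})  # {'https://example.com/page'}
```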

E-commerce sites multiply duplicate content in several ways. Product descriptions copied from manufacturers appear on thousands of competitor sites. Products are listed in multiple categories. Filtered or sorted product listings generate new URLs. Similar products carry nearly identical descriptions.

Content management systems can generate duplicates in several ways:

- Pagination that creates multiple paths to the same content
- Print versions that duplicate standard pages
- Comment pagination that creates duplicate page versions
- Tag or category archives that display full article text

Session IDs and tracking parameters appended to URLs can create a practically unlimited number of duplicate versions of the same page. For example, example.com/page?sessionid=12345 and example.com/page?sessionid=67890 show identical content, but search engines see them as different pages.
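One way to see which of these URLs are really the same page is to strip the parameters that never change content. This is a minimal sketch that assumes a hypothetical list of ignorable parameters (`sessionid`, `gclid`, `fbclid` and anything starting with `utm_`). It also assumes that parameter order never affects content, so the remaining parameters are sorted. Both assumptions need checking against your own site before you rely on them.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters that never change page content on this site.
IGNORED_PARAMS = {"sessionid", "gclid", "fbclid"}
IGNORED_PREFIXES = ("utm_",)


def strip_tracking_params(url: str) -> str:
    """Remove session/tracking parameters and sort the ones that remain."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
        and not key.lower().startswith(IGNORED_PREFIXES)
    ]
    kept.sort()  # assumes parameter order never changes content
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


print(strip_tracking_params("https://example.com/page?sessionid=12345"))
print(strip_tracking_params("https://example.com/page?sessionid=67890"))
# both print https://example.com/page
```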

Scraped or syndicated content creates external duplicates when other sites copy your content without permission or when you syndicate articles to multiple platforms without proper canonicalization.

Printer-friendly and mobile versions traditionally created duplicate content, though responsive design has largely eliminated this issue. Legacy sites might still maintain separate URLs for different devices or printing.

Boilerplate content like sidebars, footers, disclaimers, or repeated sections can create similarity between pages even when main content differs. While not typically problematic, extensive boilerplate relative to unique content can cause issues.

Why Duplicate Content Causes Problems

While not typically triggering penalties, duplicate content undermines SEO performance in several significant ways.

Diluted ranking signals occur when backlinks, social shares, and other authority signals split across multiple duplicate URLs. Instead of one page accumulating strong signals, several weak duplicates divide the value, reducing the ranking potential of all versions.

Crawl budget waste happens when search engine crawlers spend time downloading and analyzing duplicate pages instead of discovering new or updated content. For large sites, this inefficiency can delay indexation of important pages.
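You can get a rough sense of this waste by crawling your own site and counting fetches that return content you have already seen. The sketch below assumes you have (URL, body) pairs from such a crawl; the input format and `wasted_fetch_share` are hypothetical. It only catches byte-identical responses. It says nothing about how any search engine actually allocates its crawl budget.

```python
import hashlib


def wasted_fetch_share(fetches):
    """Share of fetches whose body exactly repeats an earlier fetch.

    `fetches` is an iterable of (url, body_text) pairs, e.g. exported
    from your own crawl of the site (hypothetical input format).
    """
    seen = set()
    wasted = total = 0
    for _url, body in fetches:
        total += 1
        digest = hashlib.sha256(body.encode("utf-8")).hexdigest()
        if digest in seen:
            wasted += 1  # same bytes as a page already fetched
        else:
            seen.add(digest)
    return wasted / total if total else 0.0


crawl = [
    ("https://example.com/page?sessionid=1", "<h1>Page</h1>"),
    ("https://example.com/page?sessionid=2", "<h1>Page</h1>"),
    ("https://example.com/page?sessionid=3", "<h1>Page</h1>"),
    ("https://example.com/new-article", "<h1>New</h1>"),
]
print(wasted_fetch_share(crawl))  # 0.5
```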

Wrong version ranking frustrates webmasters when search engines rank a less desirable duplicate instead of the preferred version. You might want your HTTPS version to rank. But if the HTTP version is still reachable and your internal links or sitemap point to it, Google might choose it instead.

Indexation confusion arises when duplicates make your site look redundant. That makes it harder for search engines to understand your site structure and content organization. On large sites with many parameter-generated or templated duplicates, it can also slow the discovery and indexing of important pages.

User experience degradation occurs when search results show multiple nearly-identical listings from your site, making your brand appear spammy and wasting opportunities to showcase diverse content.

Competitive disadvantage emerges when competitors with unique content outrank your pages built on duplicated material, even if your version was published first. Search engines increasingly favor original, unique content over rehashed material.

How Search Engines Handle Duplicate Content

Understanding search engine perspectives on duplication helps you implement effective solutions.

Content filtering represents the primary mechanism search engines use to address duplicates. Rather than showing all versions, they select one “canonical” version to display in search results while filtering out the others. This filtering is automatic and happens without penalties.

Clustering and canonicalization group duplicate pages together, selecting the best representative. Search engines use signals like URL structure, internal linking, canonical tags, and historical data to choose which version to show.
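A toy model can illustrate the clustering idea. First group pages whose normalized text is identical, then pick one representative per group. The selection rules here are assumptions chosen to mirror the signals named above:

- A canonical tag that points inside the cluster wins.
- Otherwise, prefer HTTPS, then more internal links, then the shorter URL.

This is not a description of how Google actually weighs these signals. `Page`, `cluster_pages` and the sample URLs are hypothetical.

```python
import hashlib
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Page:
    url: str
    body: str
    declared_canonical: Optional[str] = None  # href of rel="canonical", if any
    inbound_links: int = 0                    # internal links pointing here


def fingerprint(text: str) -> str:
    # Ignore case and whitespace so trivial formatting changes don't split clusters.
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def choose_representative(cluster: list[Page]) -> str:
    urls = {p.url for p in cluster}
    # Canonical tags that point at a member of this cluster count as votes.
    votes = Counter(p.declared_canonical for p in cluster if p.declared_canonical in urls)
    if votes:
        return votes.most_common(1)[0][0]
    # Fallback heuristics (illustrative): HTTPS, more internal links, shorter URL.
    best = max(cluster, key=lambda p: (p.url.startswith("https://"), p.inbound_links, -len(p.url)))
    return best.url


def cluster_pages(pages: list[Page]) -> dict[str, list[str]]:
    groups = defaultdict(list)
    for page in pages:
        groups[fingerprint(page.body)].append(page)
    return {choose_representative(g): [p.url for p in g] for g in groups.values()}


pages = [
    Page("http://example.com/shoes", "Red shoes", inbound_links=1),
    Page("https://example.com/shoes", "Red  shoes", inbound_links=5),
    Page("https://example.com/shoes?sort=price", "red shoes"),
    Page("https://example.com/hats", "Blue hats"),
]
for canonical, members in cluster_pages(pages).items():
    print(canonical, "<-", members)
```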

Link consolidation attempts to attribute PageRank, link equity and other authority signals to the version search engines determine is canonical. However, when duplicates exist without clear canonicalization signals, these valuable metrics may split inefficiently.

Pattern recognition helps search engines identify technical causes of duplication, such as parameter-based URLs or protocol variations, allowing them to handle these systematically without requiring page-by-page evaluation.

Identifying Duplicate Content Issues

Several tools and techniques help you discover duplicate content problems on your site.

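Google Search Console shows which URLs Google treats as duplicates and which version it selected as canonical. The Page indexing report (formerly the Coverage report) lists statuses such as “Duplicate without user-selected canonical” and “Duplicate, Google chose different canonical than user.” The second status reveals when Google picks a different version than the one you specified. For any individual page, the URL Inspection tool shows both the user-declared canonical and the Google-selected canonical.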

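A site: search in Google (for example, site:example.com) gives a rough estimate of how many pages from your site are indexed. Compare this to your known page count. The figure is only an estimate, so treat a significant discrepancy as a prompt to investigate in Search Console, not as proof that duplicates are being filtered out.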

Copyscape and plagiarism checkers identify external duplicate content by searching the web for copies of your content on other domains. This helps you discover scrapers or unauthorized syndication.

Screaming Frog and similar crawlers analyze your entire site to identify internal duplicates, near-duplicates, and pages with similar content. These tools can detect duplicate title tags, meta descriptions, and body content.
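Crawlers that report near-duplicates typically compare pages by overlapping text rather than exact matches. The Python sketch below uses one common technique: word shingles compared with Jaccard similarity. The shingle size of 3 and the 0.6 threshold are arbitrary assumptions, and the location-page URLs are hypothetical. Commercial tools may use different methods and thresholds.

```python
from itertools import combinations


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """All runs of k consecutive lowercase words in the text."""
    words = text.lower().split()
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicates(pages: dict[str, str], threshold: float = 0.6):
    """Return (url_a, url_b, similarity) for pairs at or above the threshold."""
    sigs = {url: shingles(text) for url, text in pages.items()}
    pairs = []
    for u, v in combinations(sorted(sigs), 2):
        score = jaccard(sigs[u], sigs[v])
        if score >= threshold:
            pairs.append((u, v, round(score, 2)))
    return pairs


template = "Our plumbers in {} offer fast repairs, fair prices and friendly service every day of the week"
pages = {
    "https://example.com/plumbers-austin": template.format("Austin"),
    "https://example.com/plumbers-dallas": template.format("Dallas"),
    "https://example.com/water-heater-guide": "Read our guide to choosing a water heater for a small apartment",
}
print(near_duplicates(pages))
# [('https://example.com/plumbers-austin', 'https://example.com/plumbers-dallas', 0.67)]
```

Changing only the city name alters 3 of the 15 shingles on each page, which is why the two location pages still score about 0.67.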

Manual content sampling involves examining several pages to identify patterns of duplication. Look for repeated blocks of text, similar product descriptions, or templated content with minimal unique information.

Solutions for Duplicate Content

Addressing duplicate content requires strategic implementation of several technical solutions.

Canonical tags are the most powerful tool for managing duplicate content. You add `<link rel="canonical" href="https://example.com/preferred-url">` to the head section of each duplicate page. This tells search engines which version to treat as authoritative. Search engines treat the tag as a strong hint rather than a binding directive, so keep it consistent with your redirects and internal links. Use canonical tags when duplicates must remain accessible to users but you want search engines to consolidate signals around one version.
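When auditing canonical tags, it helps to extract them programmatically. This minimal sketch uses Python's standard `html.parser` to collect every `rel="canonical"` link on a page. The `find_canonicals` helper is hypothetical. A page that yields no canonical, or several different ones, is worth reviewing, because conflicting tags send search engines mixed signals.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects href values of <link rel="canonical"> elements."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        # rel may contain several space-separated tokens; check each one.
        rel_values = (attributes.get("rel") or "").lower().split()
        href = attributes.get("href")
        if "canonical" in rel_values and href:
            self.canonicals.append(href)


def find_canonicals(html: str) -> list[str]:
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    return finder.canonicals


page = """<html><head>
<link rel="stylesheet" href="/style.css">
<link rel="canonical" href="https://example.com/preferred-url">
</head><body>Printer-friendly copy</body></html>"""
print(find_canonicals(page))  # ['https://example.com/preferred-url']
```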

301 redirects permanently redirect duplicate URLs to your preferred version. Unlike canonicals, redirects also send users to the canonical page. Use redirects when duplicate URLs serve no purpose and can be eliminated entirely, such as consolidating HTTP to HTTPS or www to non-www variations.
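Redirects are usually configured in the web server, CDN or hosting control panel, but the logic is easy to see in application code. This is a minimal WSGI middleware sketch. It assumes a hypothetical preferred origin of https://example.com, and the names `redirect_to_canonical` and `hello_app` are illustrative. Behind a proxy that terminates TLS, `wsgi.url_scheme` may report `http` even for secure requests, so a real deployment would need to account for that.

```python
from urllib.parse import quote, urlsplit

# Hypothetical preferred origin for this illustration.
CANONICAL_ORIGIN = "https://example.com"
_canonical = urlsplit(CANONICAL_ORIGIN)


def redirect_to_canonical(app):
    """Wrap a WSGI app so non-canonical scheme/host requests get a 301."""

    def middleware(environ, start_response):
        scheme = environ.get("wsgi.url_scheme", "http")
        host = environ.get("HTTP_HOST", "").lower()
        if scheme == _canonical.scheme and host == _canonical.netloc:
            return app(environ, start_response)  # already canonical
        # PATH_INFO is latin-1 decoded per PEP 3333; re-encode before quoting.
        path = quote(environ.get("PATH_INFO", "").encode("latin-1")) or "/"
        query = environ.get("QUERY_STRING", "")
        location = f"{CANONICAL_ORIGIN}{path}" + (f"?{query}" if query else "")
        start_response("301 Moved Permanently", [("Location", location)])
        return [b""]

    return middleware


def hello_app(environ, start_response):
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"hello"]


app = redirect_to_canonical(hello_app)
```

For a quick local experiment you could serve `app` with `wsgiref.simple_server.make_server`. A request for http://www.example.com/page?a=1 would be answered with a 301 pointing to https://example.com/page?a=1.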

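Google Search Console once offered a URL Parameters tool for telling Google how to treat parameters, such as marking tracking parameters as not affecting content. Google retired that tool in 2022 and now handles most parameters automatically. Handle parameters on your own site instead:

- Point canonical tags at the parameter-free URL.
- Link internally to clean URLs.
- Keep session and tracking IDs out of indexable URLs where possible.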

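Robots.txt blocking prevents crawlers from fetching duplicate pages. Use it carefully, for three reasons:

- A blocked URL can still be indexed without its content if other pages link to it.
- Search engines cannot see canonical or noindex tags on pages they are not allowed to crawl.
- Blocked pages pass no link equity to your preferred versions.

This makes robots.txt better suited to duplicates that mainly waste crawl budget than to duplicates you want consolidated.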
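Before relying on robots.txt, it is worth checking which URLs your rules actually block. This sketch uses Python's standard `urllib.robotparser` with hypothetical rules for printer-friendly copies and internal search results. The `blocked_urls` helper is illustrative. The standard-library parser uses simple prefix matching, so it is not a faithful model of Googlebot for rules that rely on the `*` and `$` wildcards.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block printer-friendly copies and internal search results.
ROBOTS_TXT = """\
User-agent: *
Disallow: /print/
Disallow: /search
"""


def blocked_urls(urls, robots_txt=ROBOTS_TXT, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


urls = [
    "https://example.com/print/article-1",
    "https://example.com/article-1",
    "https://example.com/search?q=shoes",
]
print(blocked_urls(urls))
# ['https://example.com/print/article-1', 'https://example.com/search?q=shoes']
```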

Meta noindex tags allow pages to remain accessible to users and be crawled but instruct search engines not to include them in search results. This works well for filtered product views or sort options that create duplicate content.
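For sorted or filtered listings, you can decide whether to emit noindex based on the URL. This is a minimal sketch that assumes a hypothetical set of sort and filter parameters. Which parameters merely reorder or filter a listing depends entirely on your own site. The page must stay crawlable for search engines to see the tag.

```python
from urllib.parse import parse_qs, urlsplit

# Hypothetical parameters that only sort or filter an existing listing.
FACET_PARAMS = {"sort", "order", "color", "size", "price_min", "price_max"}
NOINDEX_TAG = '<meta name="robots" content="noindex">'


def robots_meta_for(url: str) -> str:
    """Return a robots meta tag for sorted/filtered views, else an empty string."""
    params = parse_qs(urlsplit(url).query, keep_blank_values=True)
    if FACET_PARAMS.intersection(params):  # iterating a dict yields its keys
        return NOINDEX_TAG
    return ""


print(robots_meta_for("https://example.com/shoes?sort=price"))  # the noindex tag
print(repr(robots_meta_for("https://example.com/shoes")))       # ''
```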

Consistent internal linking to your preferred URLs reinforces which versions you consider canonical. Always link to HTTPS versions, include or exclude trailing slashes consistently, and use your preferred subdomain (www or non-www) throughout your site.
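A quick audit can flag internal links that don't match your preferred form. This sketch scans a single HTML string, while a real audit would run across every crawled page. It assumes hypothetical preferences: HTTPS, the bare domain example.com, and no trailing slash except on the home page. `inconsistent_links` and `PREFERRED_HOST` are illustrative names.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit

# Hypothetical site preferences: HTTPS, bare domain, no trailing slash.
PREFERRED_HOST = "example.com"


class LinkCollector(HTMLParser):
    """Collects href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def inconsistent_links(html: str) -> list[str]:
    """Return internal links that don't use the preferred URL form."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    problems = []
    for href in collector.hrefs:
        parts = urlsplit(href)
        host = parts.hostname or ""  # empty for relative links like "/about/"
        if host and host.removeprefix("www.") != PREFERRED_HOST:
            continue  # external link, not part of this audit
        wrong_scheme = parts.scheme == "http"
        wrong_host = host.startswith("www.")
        trailing_slash = len(parts.path) > 1 and parts.path.endswith("/")
        if wrong_scheme or wrong_host or trailing_slash:
            problems.append(href)
    return problems


html = (
    '<a href="http://example.com/page">A</a>'
    '<a href="https://www.example.com/page">B</a>'
    '<a href="/about/">C</a>'
    '<a href="https://example.com/page">D</a>'
    '<a href="https://other.org/guide/">E</a>'
)
print(inconsistent_links(html))
# ['http://example.com/page', 'https://www.example.com/page', '/about/']
```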

Write unique content for pages that serve similar purposes. Instead of using identical manufacturer descriptions across multiple product pages, create unique descriptions highlighting different features or use cases.

Syndication best practices include three steps:

- Require syndication partners to add a canonical tag pointing to your original content, or a noindex tag, which Google's documentation now recommends for syndicated copies.
- Wait before syndicating so search engines index your original first.
- Add unique introductions or conclusions to syndicated versions.

Preventing Duplicate Content

Proactive measures prevent duplicate content problems before they emerge.

Implement proper URL structure from the start. Choose either www or non-www, HTTPS or HTTP (always choose HTTPS), and trailing slash or no trailing slash, then enforce these choices consistently through redirects and canonicals.

Use responsive design instead of separate mobile URLs to avoid creating duplicate content across devices.

Consolidate similar pages rather than creating near-duplicates. One comprehensive page typically outperforms multiple thin pages covering similar topics.

Create truly unique product descriptions for e-commerce, even when selling the same products as competitors. Unique descriptions differentiate your site and avoid the duplicate content that manufacturer descriptions create.

Control session IDs and tracking parameters by using cookies instead of URL parameters for session management, implementing proper parameter handling, and ensuring analytics parameters don’t create indexable duplicate pages.

Monitor for scrapers by setting up Google Alerts for your unique content phrases and using tools like Copyscape to identify unauthorized copies. When you find scrapers, request removal or file DMCA complaints for egregious cases.

When Duplicate Content Doesn’t Matter

Not all duplication causes problems, and understanding these exceptions prevents unnecessary worry.

Short repeated elements like navigation menus, footers, or sidebars don’t constitute problematic duplicate content. Search engines understand these elements appear across pages and focus on unique main content.

Quoted material with attribution is generally not treated as problematic duplicate content. Properly attributed quotes serve legitimate purposes and don't trigger issues.

Local business pages with similar structures but different specific information (location, phone number, hours) are acceptable if the business legitimately operates multiple locations. However, adding unique content about each location improves their performance.

Intentional syndication with proper canonicalization doesn’t cause problems when implemented correctly with canonical tags pointing to originals.

Conclusion

Duplicate content represents one of the most misunderstood aspects of search engine optimization. While not typically triggering penalties, duplicate content wastes crawl budget, dilutes ranking signals, and creates indexation confusion that undermines SEO performance. Understanding the difference between problematic duplication and harmless repetition helps you focus efforts appropriately.

Most duplicate content issues stem from technical configurations rather than intentional manipulation. URL variations, e-commerce architectures, and content management systems create duplicates accidentally. The good news is that solutions like canonical tags, 301 redirects, and proper URL parameter handling effectively address these issues when implemented correctly.

The key to managing duplicate content lies in consolidating similar content when possible, clearly indicating preferred versions through technical signals when duplicates must exist, and creating genuinely unique content that provides distinct value. Focus on serving users with the best possible content organized logically, and implement technical solutions to ensure search engines understand your structure and preferences.

By addressing duplicate content proactively through proper site architecture, canonical implementation, and unique content creation, you eliminate a common obstacle to SEO success and create clearer pathways for search engines to discover, understand, and rank your most important content.