Creating excellent content means nothing if search engines can’t access, understand, and include it in their indexes. Indexability, the technical capability that determines whether search engines can discover, crawl, and add your pages to their databases, is one of the most fundamental yet frequently overlooked aspects of SEO. Before worrying about rankings, keyword optimization, or backlinks, you must ensure your pages are actually indexable. Understanding what affects indexability, and how to diagnose and fix indexability issues, forms the foundation of any successful SEO strategy.


What Is Indexability?

Indexability refers to a page’s ability to be crawled and added to a search engine’s index. An indexable page meets all the technical requirements that allow search engine crawlers to access it, process its content, and store it in the search database, where it becomes eligible to appear in search results. Conversely, non-indexable pages face barriers, either intentional or accidental, that prevent search engines from including them in their indexes.

Indexability operates as a binary state: pages are either indexable or they’re not. However, the path to indexability involves multiple technical factors that must align correctly. A single misconfiguration in robots.txt, an errant noindex tag, or server accessibility issues can render even the highest-quality content invisible to search engines.

The distinction between indexability and actual indexation matters. Indexable pages can be indexed but haven’t necessarily been discovered or added to the index yet. Non-indexable pages cannot be indexed regardless of quality or discoverability. Your goal is ensuring important pages are indexable, then working to ensure they actually get indexed through proper discovery mechanisms and quality content.

Technical Factors Affecting Indexability

Multiple technical elements determine whether pages are indexable, each creating potential barriers if misconfigured.

Robots.txt directives provide the first line of indexability control. This file, located at your domain root (example.com/robots.txt), tells search engine crawlers which parts of your site they can access. Pages blocked by robots.txt cannot be crawled, so search engines cannot read their content. The URL itself might still appear in search results with limited information (for example, without a description) if other pages link to it.

Example blocking directive:

User-agent: *

Disallow: /admin/

Disallow: /private/
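To see how a crawler would interpret the directives above, here is a minimal sketch using Python’s standard `urllib.robotparser`. The rules are passed in as text rather than fetched from a live site, and the helper name `is_crawl_allowed` and the sample URLs are illustrative assumptions, not part of any SEO tool.

```python
from urllib.robotparser import RobotFileParser

# The example rules from above, supplied as text instead of fetched over HTTP.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /private/
"""


def is_crawl_allowed(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical URLs used only for illustration.
for url in (
    "https://example.com/blog/post",
    "https://example.com/admin/settings",
    "https://example.com/private/report.pdf",
):
    print(url, "->", "allowed" if is_crawl_allowed(ROBOTS_TXT, url) else "blocked")
```

Note that the standard-library parser does simple prefix matching, so it is best treated as a rough check rather than an exact model of any particular search engine’s crawler.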

Meta robots tags in HTML provide page-level indexability instructions. The noindex directive explicitly tells search engines not to include a page in their index:

<meta name="robots" content="noindex">

X-Robots-Tag HTTP headers offer similar functionality for non-HTML files like PDFs or images:

X-Robots-Tag: noindex
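Both forms of the directive can be checked programmatically once you have a page’s HTML and response headers. The following is a rough sketch using only the standard library; the function name `has_noindex` is an assumption for illustration, and the header handling deliberately ignores user-agent-scoped values such as `googlebot: noindex`.

```python
from html.parser import HTMLParser
from typing import Mapping, Optional


class _RobotsMetaParser(HTMLParser):
    """Collects directives from every <meta name="robots"> tag in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() == "robots":
            content = attr.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(html: str, headers: Optional[Mapping[str, str]] = None) -> bool:
    """Return True if the HTML or the X-Robots-Tag header asks not to be indexed."""
    parser = _RobotsMetaParser()
    parser.feed(html)
    directives = set(parser.directives)
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            # Simplification: user-agent-prefixed values are not interpreted.
            directives.update(d.strip().lower() for d in value.split(","))
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in directives or "none" in directives
```

For a PDF or image you would pass an empty HTML string and only the headers, since those file types have no `<head>` to carry a meta tag.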

Server accessibility fundamentally affects indexability. Pages must return proper HTTP status codes (200 for success, 301 for redirects) rather than errors. Server errors (5xx status codes), DNS failures, or timeout issues prevent crawling and therefore indexation.

Authentication requirements like login walls or paywalls limit indexability unless you mark up the gated content with proper structured data or give crawlers limited access to it. Google’s First Click Free policy, which once allowed limited access to paywalled content, is now deprecated and was replaced by Flexible Sampling.

JavaScript rendering can impact indexability when critical content only appears after JavaScript execution. While modern search engines can render JavaScript, delays, errors, or complex implementations may prevent proper content extraction.

Canonical tags don’t prevent indexability but signal which version of duplicate or similar pages should be indexed. Pages with canonical tags pointing to other URLs may be crawled but typically aren’t indexed, with preference given to the canonical version.

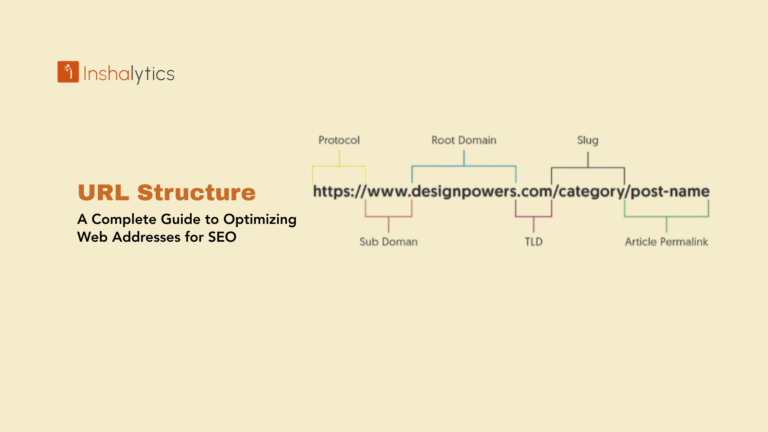

URL structure and accessibility affect whether crawlers can discover and access pages. Orphaned pages without internal links, pages requiring form submissions, or URLs embedded in JavaScript may have discoverability issues affecting practical indexability.

HTTPS and security have become baseline requirements. While HTTP pages remain technically indexable, Google prefers HTTPS pages, and browsers may display “Not secure” warnings that discourage users from visiting non-secure pages.
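The factors in this section can be thought of as a checklist in which any single failure is enough to keep a page out of the index. The sketch below models that idea; `PageSignals` and `indexability_blockers` are hypothetical names, and the signals are assumed to have been gathered already by a crawler or audit tool.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PageSignals:
    """Hypothetical snapshot of the signals collected for one URL."""
    url: str
    status_code: int
    blocked_by_robots_txt: bool = False
    has_noindex: bool = False
    canonical_url: Optional[str] = None  # None means no canonical tag was found


def indexability_blockers(page: PageSignals) -> List[str]:
    """Return the reasons a page is unlikely to be indexed (empty list = indexable)."""
    blockers = []
    if page.blocked_by_robots_txt:
        blockers.append("blocked by robots.txt")
    if page.status_code >= 500:
        blockers.append(f"server error ({page.status_code})")
    elif page.status_code >= 400:
        blockers.append(f"client error ({page.status_code})")
    elif 300 <= page.status_code < 400:
        blockers.append(f"redirects elsewhere ({page.status_code})")
    if page.has_noindex:
        blockers.append("noindex directive")
    # A canonical is a hint rather than a hard block, but such pages
    # typically are not indexed under their own URL.
    if page.canonical_url and page.canonical_url != page.url:
        blockers.append("canonical points to another URL")
    return blockers
```

Returning the list of reasons, rather than a bare true/false, keeps the binary outcome while still showing which factor needs fixing.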

Intentional vs. Unintentional Non-Indexability

Understanding the difference between purposeful indexability blocking and accidental barriers helps you manage your site strategically.

Intentional non-indexability serves legitimate purposes:

- Administrative pages like login, checkout, or user account areas

- Duplicate content variations where canonical versions should be indexed instead

- Thank you pages or confirmation pages providing no search value

- Filtered or sorted product views creating infinite URL variations

- Staging or development environments that shouldn’t appear in search

- Paid or member-only content you don’t want publicly searchable

Unintentional non-indexability creates serious SEO problems:

- Important pages accidentally blocked by robots.txt

- Noindex tags left on production pages from development/staging

- Server configuration issues preventing crawler access

- JavaScript errors preventing content rendering

- Broken internal linking leaving pages orphaned

- Redirect chains or loops confusing crawlers

- Mobile-specific issues in the mobile-first indexing era

The key is ensuring intentional blocking serves strategic purposes while identifying and fixing unintentional barriers preventing important pages from being indexed.

Diagnosing Indexability Issues

Several tools and techniques help identify what’s preventing pages from being indexable.

Google Search Console’s URL Inspection tool provides authoritative information about specific URLs. Enter any URL to see whether Google can crawl and index it, why indexation might be prevented, when Google last crawled the page, and any specific issues detected.

Common URL Inspection findings:

- “URL is on Google” = indexed successfully

- “URL is not on Google: Blocked by robots.txt” = accessibility issue

- “URL is not on Google: Excluded by ‘noindex’ tag” = meta robots issue

- “Page with redirect” = redirect preventing indexation of this URL

- “Submitted URL returned soft 404” = page appears empty or low-quality

Coverage report in Search Console shows indexability status across your entire site, categorizing pages as:

- Valid (indexed successfully)

- Valid with warnings (indexed but with issues)

- Error (problems preventing indexation)

- Excluded (not indexed, often intentionally)

Robots.txt testing in Search Console allows testing whether specific URLs are blocked by your robots.txt file. This identifies unintentional blocks before they cause problems.

View page source manually by right-clicking pages and selecting “View Page Source” to check for noindex tags in the HTML head section.

Check HTTP headers using browser developer tools (Network tab) or online tools to identify X-Robots-Tag directives or other header-level indexability controls.

Crawl your site with tools like Screaming Frog SEO Spider to identify indexability issues at scale, including noindex pages, redirect chains, orphaned pages, and broken links.

Lighthouse audits in Chrome DevTools evaluate technical SEO factors including some indexability elements like robots.txt blocking and basic crawlability.

Common Indexability Problems

Several issues frequently prevent pages from being indexable despite owners wanting them in search results.

Development noindex tags in production represent one of the most common problems. Developers add noindex during staging to prevent test sites from being indexed, then forget to remove these tags when launching. Always audit meta robots tags before going live.

Overly aggressive robots.txt blocks important sections of sites unintentionally. Common mistakes include blocking CSS and JavaScript files that crawlers need to render pages properly, blocking entire sections that should be partially accessible, or using wildcard patterns that inadvertently block more than intended.
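To illustrate how a pattern can block more than intended, the sketch below converts a robots.txt path pattern into a regular expression, assuming the common wildcard conventions where `*` matches any run of characters and a trailing `$` anchors the end of the URL. It is a simplified model: it ignores `Allow` rules, rule precedence, and percent-encoding, and the function names and sample paths are illustrative.

```python
import re


def robots_pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a compiled regular expression."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape literal pieces and let each "*" match any sequence of characters.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def pattern_blocks(pattern: str, path: str) -> bool:
    """Return True if a Disallow rule with this pattern would match the path."""
    # re.match anchors at the start, mirroring prefix matching of rules.
    return robots_pattern_to_regex(pattern).match(path) is not None


# A rule meant to block print views also catches an unrelated article.
print(pattern_blocks("/*print", "/blog/printing-guide"))
# A short prefix meant for one directory also catches other sections.
print(pattern_blocks("/p", "/pricing"))
```

Running candidate rules against a list of your most important URLs before deploying a robots.txt change is a cheap way to catch this class of mistake.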

Canonical tag misconfigurations, where pages point to non-existent URLs or create circular canonical loops, prevent proper indexation. Each page should either have a self-referencing canonical or point to an existing, accessible canonical URL.
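Both failure modes can be detected by following canonical references across a crawl export. In this sketch, `canonicals` is an assumed mapping from each crawled URL to the canonical it declares (or `None` when it has no tag); the function name and data shape are hypothetical.

```python
from typing import Dict, Optional


def resolve_canonical(url: str, canonicals: Dict[str, Optional[str]]) -> str:
    """Follow canonical references from url to the final canonical URL.

    Raises ValueError if a reference points to an unknown URL or forms a loop.
    """
    seen = {url}
    current = url
    while True:
        if current not in canonicals:
            raise ValueError(f"canonical target not found: {current}")
        target = canonicals[current]
        if target is None or target == current:
            return current  # no tag or self-referencing: this is the canonical
        if target in seen:
            raise ValueError(f"canonical loop via {target}")
        seen.add(target)
        current = target
```

A chain of more than one hop (A points to B, B points to C) resolves without error here, but it is still worth flagging in an audit, since each page should ideally point straight at the final canonical.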

Redirect chains frustrate crawlers and waste crawl budget. Pages that redirect multiple times (A → B → C → D) may not get indexed. Implement direct redirects (A → D) instead.
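Collapsing a chain into direct redirects is a simple graph walk. The sketch below assumes the redirect rules are available as a source-to-target mapping (for example, exported from a server config or crawl); `redirect_chain` and `flatten_redirects` are illustrative names.

```python
from typing import Dict, List


def redirect_chain(start: str, redirects: Dict[str, str]) -> List[str]:
    """Return every URL visited when following redirects from start."""
    chain = [start]
    while chain[-1] in redirects:
        nxt = redirects[chain[-1]]
        if nxt in chain:
            raise ValueError("redirect loop: " + " -> ".join(chain + [nxt]))
        chain.append(nxt)
    return chain


def flatten_redirects(redirects: Dict[str, str]) -> Dict[str, str]:
    """Rewrite every rule so its source points directly at the final destination."""
    return {src: redirect_chain(src, redirects)[-1] for src in redirects}


# The chain from the text: A -> B -> C -> D.
rules = {"/a": "/b", "/b": "/c", "/c": "/d"}
print(redirect_chain("/a", rules))
print(flatten_redirects(rules))
```

Any chain longer than two entries (source plus destination) is a candidate for flattening, and a raised loop error points to a rule set that no crawler can resolve.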

Server configuration errors including incorrect 404 implementations (soft 404s), server errors from overwhelmed resources, or misconfigured security settings blocking legitimate crawlers prevent indexation.

JavaScript-dependent content where essential elements only appear after complex JavaScript execution may not be properly indexed if crawlers can’t render it correctly. Test with URL Inspection’s “Test Live URL” feature to see how Google renders your pages.

Orphaned pages without any internal links never get discovered by crawlers following links through your site. Ensure important pages receive links from at least one other page on your site.
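One way to find orphans is to compare the full list of URLs you know about (from a sitemap or CMS export, say) with the set a crawler can reach by following internal links from the homepage. This is a minimal sketch under that assumption; the function name and the sample link graph are hypothetical. It is slightly stricter than "no inbound links", since it also flags pages that are only linked from other unreachable pages.

```python
from collections import deque
from typing import Dict, Iterable, List


def find_orphans(
    links: Dict[str, Iterable[str]],
    all_pages: Iterable[str],
    home: str = "/",
) -> List[str]:
    """Return known pages that cannot be reached by following links from home."""
    reachable = {home}
    queue = deque([home])
    while queue:  # breadth-first walk of the internal link graph
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in reachable:
                reachable.add(target)
                queue.append(target)
    return sorted(set(all_pages) - reachable)


# Hypothetical site: each key links to the pages in its list.
site_links = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1"],
    "/old-landing": ["/"],
}
known_pages = ["/", "/blog", "/about", "/blog/post-1", "/old-landing", "/blog/post-2"]
print(find_orphans(site_links, known_pages))
```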

Pagination issues can fragment content discovery when a paginated series breaks crawlability, either through incorrect implementation of rel="next" and rel="prev" (now deprecated) or through inadequate linking between pages.

Improving Indexability

Systematic improvements ensure your important pages can be discovered, crawled, and indexed.

Audit robots.txt thoroughly to verify you’re not blocking important pages or resources. Test critical URLs with Search Console’s robots.txt tester. Consider using minimal robots.txt that only blocks truly private or duplicate content.

Remove unnecessary noindex tags from pages you want indexed. Implement systematic pre-launch checklists ensuring development-phase noindex tags don’t make it to production.

Fix server errors that prevent crawler access. Monitor uptime, ensure adequate server resources, and resolve 5xx errors promptly. Pages must be accessible to be indexable.

Implement proper internal linking ensuring every important page receives links from other pages on your site. Create logical site architecture with clear paths from homepage to deep content.

Optimize for JavaScript rendering by ensuring critical content exists in HTML before JavaScript execution when possible. Use server-side rendering or progressive enhancement for important content rather than pure client-side rendering.

Establish XML sitemaps listing all indexable pages and submit them through Search Console. Sitemaps help crawlers discover pages efficiently, especially on large sites or sites with complex architecture.
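A basic sitemap is a small XML file in the sitemaps.org format, and it can be generated from a list of indexable URLs. The following sketch uses only the standard library; `build_sitemap` is an illustrative helper, and optional elements such as `<lastmod>` are left out for brevity.

```python
import xml.etree.ElementTree as ET
from typing import Iterable

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: Iterable[str]) -> str:
    """Return a minimal XML sitemap containing one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" when serializing.
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical URLs; in practice, list only pages you want indexed.
print(build_sitemap(["https://example.com/", "https://example.com/blog/post"]))
```

Only include URLs that are actually indexable: listing pages that are blocked, noindexed, or redirected sends search engines conflicting signals.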

Use canonical tags correctly by ensuring they point to valid, accessible URLs (preferably the page’s own URL for most pages). Avoid canonical chains or broken canonical references.

Simplify redirects by eliminating chains and ensuring redirects point directly to final destinations. Use 301 redirects for permanent moves rather than JavaScript or meta refresh redirects.

Monitor Search Console regularly for new indexability issues. Set up email alerts for critical errors and review the Coverage report frequently to catch problems early.

Test before deploying changes that might affect indexability. Major site changes, CMS updates, or hosting migrations should include indexability testing before going live.

Indexability and Site Migrations

Site migrations present special indexability risks requiring careful management.

Pre-migration audit should verify which pages are currently indexed and should remain indexable after migration. Document robots.txt configuration, noindex tags, canonical implementations, and redirect maps.

Implement redirects properly from old URLs to new equivalents, ensuring every important old URL redirects to an appropriate new page. Use 301 (permanent) redirects rather than 302 (temporary).
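A redirect map can be audited before launch by checking that every old URL has a rule, that the rule uses a 301, and that the target exists on the new site. The sketch below assumes the map is a dictionary of `old_url -> (new_url, status_code)`; that shape, the function name, and the sample URLs are assumptions for illustration.

```python
from typing import Dict, Iterable, List, Tuple


def audit_redirect_map(
    old_urls: Iterable[str],
    redirect_map: Dict[str, Tuple[str, int]],
    new_urls: Iterable[str],
) -> List[str]:
    """Return a list of human-readable problems found in a migration redirect map."""
    live = set(new_urls)
    problems = []
    for old in old_urls:
        if old not in redirect_map:
            problems.append(f"{old}: no redirect defined")
            continue
        target, status = redirect_map[old]
        if status != 301:
            problems.append(f"{old}: uses {status}, expected 301")
        if target not in live:
            problems.append(f"{old}: target {target} does not exist on the new site")
    return problems


# Hypothetical migration data.
old = ["/products.html", "/about-us.html", "/contact.html"]
rules = {"/products.html": ("/products/", 301), "/about-us.html": ("/about/", 302)}
new = {"/products/", "/contact/"}
for problem in audit_redirect_map(old, rules, new):
    print(problem)
```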

Update robots.txt for the new site structure, ensuring no unintentional blocks prevent crawler access to migrated content.

Remove development restrictions like authentication requirements or noindex tags before launch, or immediately after DNS switches to production.

Monitor post-migration intensively through Search Console, watching for unexpected deindexation, crawl errors, or indexability issues. The first few weeks after migration are critical for catching problems.

Maintain old domain temporarily if changing domains, keeping 301 redirects active for at least a year (preferably permanently) to ensure link equity transfer and smooth crawler transition.

Indexability for Different Page Types

Different content types face unique indexability considerations.

Product pages on e-commerce sites must balance indexability of core product pages against preventing indexation of infinite filtered, sorted, or parameter-driven variations. Use canonical tags and robots.txt strategically.
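For parameter-driven variations, one common approach is to compute the canonical URL by stripping parameters that only change presentation. This sketch assumes a hand-maintained list of such parameters; the names in `NON_CANONICAL_PARAMS` are hypothetical and would need to match your own site’s URL scheme.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that create duplicate views of the same product list.
NON_CANONICAL_PARAMS = {
    "sort", "order", "color", "size",
    "utm_source", "utm_medium", "utm_campaign",
}


def canonical_url(url: str) -> str:
    """Return url without presentation-only parameters or a fragment."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in NON_CANONICAL_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


print(canonical_url("https://shop.example.com/shoes?color=red&sort=price"))
print(canonical_url("https://shop.example.com/shoes?page=2&sort=price#reviews"))
```

The result is what each variation’s canonical tag would point to. Whether a parameter like `page` belongs in the stripped set is a site-specific decision, which is why it is kept here.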

Blog posts should be fully indexable with clear URLs, proper internal linking from category pages and archives, and no inadvertent blocking preventing discovery.

Landing pages created for campaigns need indexability decisions based on whether you want organic traffic or prefer that the pages serve only paid traffic. Short-term campaign pages might intentionally be noindexed.

PDF documents and downloads require different indexability approaches. PDF content can be indexed, but you must decide whether you want PDFs ranking or prefer HTML versions with PDFs as downloads.

Multimedia content like videos and images benefits when the pages hosting it are indexable and carry proper structured data markup that helps search engines understand the media.

Strategic Indexability Management

Mature SEO strategies involve intentional indexability decisions rather than simply maximizing indexed page counts.

Prioritize important pages by ensuring your best content is fully indexable while strategically blocking low-value pages that consume crawl budget without providing search value.

Manage crawl budget on large sites by preventing indexation of duplicate content, filtered views, or administrative pages, allowing crawlers to focus on pages that matter.

Consolidate thin content rather than leaving many weak pages indexable. Merge similar topics into comprehensive resources or noindex pages that don’t merit individual presence in search results.

Create clear indexability policies documenting which page types should be indexed, which should be blocked, and why. This prevents confusion and accidental blocking during development or updates.

Review indexability regularly as your site grows and changes. Pages that were appropriately noindexed might become valuable enough to index, or previously important pages might become redundant.

Conclusion

Indexability represents the fundamental gateway to search visibility: pages that can’t be indexed can’t rank, regardless of content quality or optimization. This technical capability depends on multiple factors including robots.txt configuration, meta robots tags, server accessibility, JavaScript rendering, and URL structure. A single misconfiguration can render entire site sections invisible to search engines, making indexability auditing essential for SEO success.

Successful indexability management requires balancing two objectives: ensuring important pages can be discovered, crawled, and indexed while strategically preventing low-value pages from consuming crawl budget and cluttering search results. Use tools like Google Search Console’s URL Inspection and Coverage reports to diagnose issues, fix unintentional barriers promptly, and implement intentional blocking only where it serves strategic purposes.

Regular indexability audits, especially before and after major site changes, prevent costly visibility losses from technical errors. Monitor systematically, test thoroughly, and maintain clear documentation about indexability decisions. When you master indexability fundamentals, ensuring your best content is technically accessible to search engines, you establish the foundation upon which all other SEO efforts build. Without indexability, even the world’s best content remains invisible to searchers who need it.